
During the Search On event, Google shared how machine learning helps the company create better, more intuitive search experiences. Read on!

Check out the planned improvements on Google Search

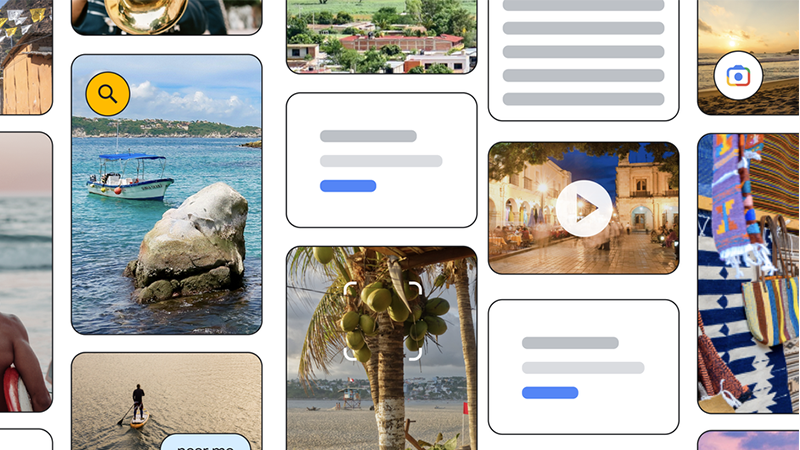

Google Search improves visual experience, introduces new features


Google Search will now be smarter and will utilize machine learning more

Machine learning helps Google build search experiences that mirror how people make sense of the world around them. By analyzing information in its various forms, including text, images, and the real world, it can help users gather and explore information in new ways. Google is also making visual search more natural and user-friendly.

This year, Google introduced Multisearch, which makes visual search more natural. With Multisearch, you can search with a photo or a screenshot and ask a question about a particular object in it. Multisearch is available in English worldwide and will soon be available in more than 70 languages.

With the new "Multisearch Near Me" feature, you can take a picture of a dish or an item to find it nearby. This way of searching helps you find local businesses, whether you want to support your neighborhood store or you need something right away. "Multisearch Near Me" will debut in the United States this September.

Google Lens can translate more than plain text. Google translates text in images 1 billion times each month, into more than 100 languages. Advances in machine learning now make it possible to blend translated text into complex images, and improved machine learning models let this happen in 100 milliseconds.

With this update, users will be able to point their camera at a poster in another language and see the translated text superimposed on the image. The Google Lens update will be released toward the end of the year.

Google is also bringing some of its most practical features to the Google app for iOS. Under the search bar, users can now find shortcuts for shopping from screenshots, translating text with the camera, and humming to search.

Improved algorithms for exploring information

Google will also improve how its algorithms process information. Users will be able to search with fewer words, or even with images, and Google should, in theory, still understand the query and return relevant results.

Users can look at information structured in a way that makes sense to them, such as by digging deeper into a topic as it unfolds or by discovering fresh perspectives. Google will soon make a faster search option available. According to the company, a Google search might deliver pertinent results before you finish typing a question.

The new search experiences from Google enable more natural topic exploration. As you type, Google will suggest keywords or subtopics to help you build your query. If you are planning a trip to Mexico, for example, Google can help you narrow your search to something more specific, such as "best cities in Mexico for families."

Google makes it simple to research a subject by highlighting relevant and useful content, including free online resources. For a city, you can find short videos and visual stories from people who have visited, as well as advice on what to see and do, how to get there, and other travel-related information.

Based on its understanding of how people search, Google will soon suggest topics that help you go deeper or take your search in a different direction. You can add or remove topics to zoom in or out.

What do you guys think?
